Stats, variance, and not always trusting the numbers

An intro to the TPRR pieces, which became its own post

We’re almost to that time. I’m pretty stoked to be going through all the TPRR data with you soon, and I know most of you have been around for a little while and know what these pieces are. But I still see posts and commentary arguing that stats like TPRR aren’t the best stats because they aren’t the stickiest, or aren’t the most predictive of Year N+1 fantasy points. So I started to write a little intro about that, and it got long (as my intros do), so I’ve split it out into its own post.

A lot of this post is going to reject basic analytical assumptions that the entire fantasy football industry seems to make. It feels like the right time of year to dive into this kind of thing, because I allude to it at times during the season, but here in February I can roll my sleeves up a little bit and talk about how we analyze this stuff.

First of all, being the stickiest stat, or the most predictive of Year N+1 fantasy points, quite simply doesn’t matter for identifying “the best” stats. That sounds aggressive, but I don’t know how else to put it. People make that assumption and spend so much time comparing stats to one another based on marginal differences in measured r-squared, and none of it really improves how they apply the stat. Or that part just gets lost. A ton of those analysts don’t actually make strong predictions (I see the predictions, in real time), but they will get extremely argumentative about what the best stats are.

Digging into correlations and r-squared figures for a variety of stats is extremely common, and it leads to splitting hairs among various stats that, in the grand scheme of things, grade similarly. TPRR often shows up as a second-tier stat in these analyses, but importantly, it never shows up as useless. And really, the differences between these stats should be about that: Is there predictiveness, or is it all variance? And then it should shift to: How can we apply it? Because for TPRR, as I’ve written before, the additional context is all about aDOT and efficiency, which are things I actually like having isolated outside the metric.

So if you do hear people bashing TPRR within these analyses of “the best stats,” consider that they likely can’t — and don’t — apply their “better” stats to make more accurate predictions. That’s because even “the best” stats have a ton of unexplained variance. Full stop. That’s football.

Context will always be highly relevant to football data, and the raw numbers can never tell the whole story, which anyone who actually watches the sport religiously inherently understands. What matters is how we use the stats available to us. We’re trying to predict outcomes. That’s it, the whole game. And unless you believe looking at one single stat will tell you everything (and you absolutely shouldn’t, because that’s gotten people into trouble time and again), all you should care about is proving that a stat has value, and then figuring out how to apply it.

Basically all of the data analyses you’ll see are based on the same core stats and observed outcomes. It’s the same targets, receptions, yards, and touchdowns, cut into 95 different slices to double-, triple-, and quadruple-count the observable stuff, while failing to accept that there is always unexplained variance. Rather than continuing to count the same observable stuff over and over (and arguing about marginal differences in the measured predictiveness of the various ways to slice that data), the edge unequivocally lies in wading into the unknown part of the equation.

This is why I have such respect for TPRR as a stat. All of those 95 different slices do tend to agree on one fact: Targets are incredibly valuable. Maybe volume is earned, or maybe first-read target data argues it’s not, and it’s just that the team knows who it wants to get the ball to, or maybe that data is being overemphasized. There are always new stats and new disagreements as every Adderall-enhanced snake oil salesman tries to prove his data concoction is the most potent. Some of the new stats du jour do move the discourse forward, but others (and I’d wager more) get touted as revolutionary, serve as fodder for a round of (ultimately unhelpful) content, and then just ingloriously fade out. What’s true and undeniable and sustains (and I use that word intentionally) is that targets matter. And that good players get targets.

Targets per route run simplifies this. Beyond the scope of TPRR is a ton of context, all of which I believe is relevant, but all of which needs to be respected within the scope of the individual analysis.
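For anyone newer to the stat, the calculation itself is simple: targets divided by routes run. A minimal sketch follows; the player labels and season lines are hypothetical, not real data. Because the denominator is routes run rather than snaps, plays where a player didn’t run a route don’t count against him.

```python
def tprr(targets: int, routes_run: int) -> float:
    """Targets per route run: the share of a player's routes that drew a target."""
    if routes_run <= 0:
        raise ValueError("routes_run must be positive")
    return targets / routes_run


# Hypothetical season lines: (targets, routes run). Not real player data.
players = {
    "Player A": (130, 520),
    "Player B": (95, 560),
}

for name, (targets, routes) in players.items():
    print(f"{name}: {tprr(targets, routes):.1%} TPRR")
```

Everything beyond that ratio (aDOT, efficiency, role, QB, scheme) is the context the TPRR posts layer on afterward, deliberately kept outside the metric.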

[A transition while I get salty for a moment.]

Other analysts will throw more layers of information into one overarching stat, positioning themselves as more thorough while in actuality trying to create something that allows them to be lazier. You will read arguments for one player, or a couple of players, that some miracle stat loves. The worst of these will position that analysis as superior to anything else, even though it removes any chance to analyze that unexplained variance, merely whittling it down to squeeze just a drop more r-squared out of the equation.

But all of that unexplained variance exists because differences exist. I’ve written before about how much I’ve enjoyed attempts to bucket and classify different types of pass-catchers, like a slot receiver with a low aDOT and strong YAC versus an outside WR who is merely a deep speed threat. But even that stuff isn’t simple. A slot receiver with strong YAC is demonstrably different from one without it. An outside WR who is merely a deep speed threat is demonstrably different from one with those same skills who also possesses the route-running ability to earn targets in the short and intermediate areas. In these examples we can be talking about the differences between, like, Amon-Ra St. Brown and Hunter Renfrow (and I mention Renfrow remembering how people loved his YAC early in his career, despite it being legit two slants he took to the house in one season, and not a down-by-down sustainable skill).

I’ve written Stealing Signals for seven years, and sometimes I think stuff I’ve come to understand is more widely known than it is. Other times I just lack conviction and suppress the nagging feeling that everyone else is missing something, until it kind of boils over and suddenly I sound arrogant as hell. You’re familiar with my style if you’re reading me in February, but this is how I communicate hard-won domain knowledge from a ton of good work. I wish I could do it more elegantly.

What I believe strongly is that you can’t boil the WR position, or pass-catching stats, or any of this stuff down into all-encompassing stats. ARSB is playing a different position entirely than an outside receiver, but even the different slot guys are different, and among the outside guys, even if we set aside weight and ignore how different a guy like Marquise Brown is, there’s just such a difference between A.J. Brown and Mike Evans. And I’m talking about role, but those are also guys who have transcended multiple different types of offenses and QBs, and continued to win in ways that are unique to them: Evans is this high-aDOT guy who still dominates targets in a way a lot of the high-aDOT guys don’t, and Brown is similar but with less of a strict downfield profile and a major edge in ball-in-hand YAC ability. But I mean, the nuances of their profiles aren’t the point, obviously. I’m just saying you could bucket those two together and still not be able to parse what makes each of them unique and helps them win.

My point is a broader one. If you haven’t noticed, I grow increasingly disenchanted with the analysis put forth in my industry by the year, and frankly by the day. There are only so many takes, but there are far more takesmen, and it’s really easy to cook up some nonsense and then just get to the conclusion you wanted to reach. And in the end it’s really all about whether you’re in or out on the player, right? Except there’s also the cost element: Even great bets can be overpriced, and less-than-ideal bets can get cheap enough that they make sense.

You wind up with both unnecessary complexity and also the scourge of simplicity. On one hand, new stats that couldn’t possibly stand up to rigorous review, which told you to draft one specific player who did hit and are now preached as bible, until they inevitably strike out in some future season and are thrown on the scrap heap for the next great thing. On the other hand, the people who claim victory for being in on players when they didn’t really analyze anything, and the player did stuff they never really articulated with any detail, because it’s all as simple as “I told you to draft that guy.” It feels like no one is analyzing the analysis, whether simple or complex. I mean, why would you? If you did, you’d see quickly that half the people are never showing their work (for a reason), and the other half might as well not be, because their logic is the exact reason “show your work” became a thing in schools, where any good teacher would look at their nonsense and dock points even if they had the right answer (and then probably question who they were cheating off to get to that answer, given their process was all wrong). “Oh, you got the answer right, but you can’t show me any repeatable step as to how you got there? That’s fishy.”

It will just never not be frustrating to me where the incentives are in this industry, and it makes sense that the brightest minds often seem to just move on to other pursuits.

One thing that feeds my saltiness above is that getting the right answer doesn’t always follow from strong process. And a big part of that isn’t a fun answer, and just sounds like bitching: Variance.

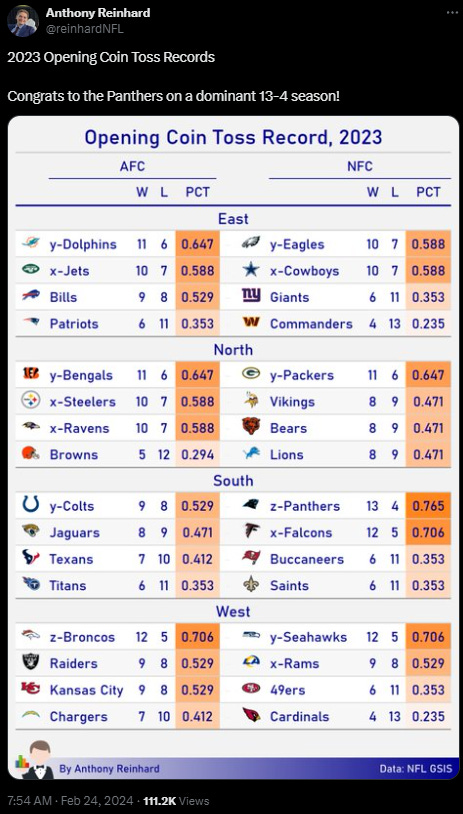

My favorite tweet from the offseason so far comes from Anthony Reinhard.

I can’t begin to tell you how much I’ve loved thinking about this. It’s not just the Panthers at 13-4, or the Commanders and Cardinals at 4-13, because I think we all understand outliers by now, and what tail risk is, and how in a normal distribution there are going to be instances where “luck” is pushed far from the mean. I saw a reply mentioning the 2022 Vikings, which is a great example of a situation where plenty of publicity was given to the extreme outcome (for those who don’t remember, it was very popular to note that the Vikings had a W-L record much better than their peripherals suggested they actually were, and then they did inevitably get upset at home in the first round of the playoffs by an equally fraudulent Giants team).

So we all understand you can flip a coin 10 times and get eight heads. That’s like the first step of understanding variance, and luck, and these factors no one wants to talk about, because trying to attribute anything to “bad luck” just sounds like sour grapes. Be better. This is America, dammit.

But it’s not about complaining about past results. There’s so much value in understanding this for predicting the future. As you start to dig into those figures, the part that really fascinates me is the whole distribution, i.e. how often things deviated from 50/50 or close to it. It’s so easy to assume that over an NFL season, luck-based factors will even out. But these are coinflips! And the fact of the matter is, they do not. And if you understand that fact, you understand that you should expect to find things like this in any data.

When you apply it to players, the use cases are so obvious. The feeling among analysts is that if someone isn’t running well for a period of time, either that will level off, or else they just suck. But again, look harder at this coinflip data. In a sample of just 32 teams, with only 17 data points each (a sample that very much resembles an NFL season, because it’s obviously data from an NFL season), you wind up with three teams at 4-13 or 13-4, and four more at 5-12 or 12-5. That’s seven teams, nearly a quarter of the league, where variance pushed an outcome we might have expected to land close to 50/50 over the course of a season out beyond 70/30 in one direction or the other.

But go one step further. There are nine more teams at 6-11 or 11-6, which brings us to 16 teams — half the league — that were roughly 65/35 or further in one direction, either “good” or “bad” luck. If we set aside the fact that winning the coin toss isn’t that relevant to winning games, and pretend that it’s worth a lot — like how fumble luck has been shown to be highly variable, or key drops on third downs, or what happens in the kicking game, or just one-score outcomes in general, which obviously are heavily influenced by these possession-defining moments like a key lost fumble, a drop, or a missed FG here or there — and then we think about this distribution and what it’s telling us, it’s a really fascinating point to consider. We should expect that pretty damn often, luck-based things will play a huge role.
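A natural companion question is what a pure fair-coin model predicts for those same buckets. A minimal sketch, assuming every team’s 17 tosses are independent fair flips (an idealization), using Python’s `math.comb` to get exact binomial probabilities; the helper name is hypothetical:

```python
from math import comb

GAMES = 17  # coin tosses per team in a 17-game season
TEAMS = 32


def prob_at_least_as_lopsided(k: int, games: int = GAMES) -> float:
    """P(k or fewer heads, or k or fewer tails) in `games` fair flips.

    Assumes k < games / 2, so the two tails don't overlap.
    """
    one_tail = sum(comb(games, i) for i in range(k + 1))
    return 2 * one_tail / 2**games


for k in (4, 5, 6):
    p = prob_at_least_as_lopsided(k)
    print(f"{k}-{GAMES - k} or more lopsided: P = {p:.3f}, "
          f"expected teams = {TEAMS * p:.1f}")
```

This works out to expected counts of about 1.6, 4.6, and 10.6 teams. Those are cumulative, so they line up with the running totals in the text (3, 7, and 16 teams). The observed season ran somewhat more extreme than the idealized model, but even the pure-chance model says roughly a third of the league should land at 6-11/11-6 or further from .500.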

In any given game, maybe a team should win 60% of the time based on how they played (over 17 games, that’s 10.2 wins), but they run on the 35% side of the 65/35 split (or further; maybe they are 20% lucky and 80% unlucky). Suddenly they look like a 6-11 team. (Remember those Chargers teams, especially that one year where they missed like six late field goals in close games?)
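The arithmetic behind that example is easy to check. A minimal sketch, assuming (as a simplification) that each game is an independent event the team wins with a fixed probability; `prob_at_most` is a hypothetical helper:

```python
from math import comb

GAMES = 17


def prob_at_most(wins: int, games: int, p: float) -> float:
    """Binomial P(X <= wins): chance of `wins` or fewer wins when each game is won with probability p."""
    return sum(comb(games, k) * p**k * (1 - p) ** (games - k) for k in range(wins + 1))


true_win_rate = 0.60  # the hypothetical "should win 60% of the time" team
expected_wins = GAMES * true_win_rate  # 10.2 wins
unlucky_wins = GAMES * 0.35  # running at a 35% clip instead: about 5.95, i.e. a 6-11 team
p_six_or_fewer = prob_at_most(6, GAMES, true_win_rate)

print(f"Expected wins: {expected_wins:.1f}")
print(f"Wins at a 35% clip: {unlucky_wins:.2f}")
print(f"P(6 or fewer wins | true 60% team): {p_six_or_fewer:.3f}")
```

Under that simplified model, a true 60% team finishes 6-11 or worse only about 3.5% of the time. That is rare for any one team in any one season, but not something that should shock us across a 32-team league and many seasons.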

The point is, it always feels like excuse-making to say a team isn’t what its record says, and instead they’ve run hot or cold or what have you. But we should expect there are teams like this that exist. That’s what this coin flip data is telling us. These teams exist, and it’s half the league(!) that is being meaningfully impacted either positively or negatively, such that their record isn’t actually telling us what we think it is. Take a second to really comprehend the impact of that. By the way, this dovetails with my research on how hard it is to project team volume, where I’ve shown past projections missing substantially on about half the league in a given year.

Now step aside from the 32 teams and think about a sample of hundreds of players. Do you see where I’m going with this? It’s the unexplained variance, right? This also ties into the stuff I tried to articulate in my writing about “situation regression” a few years ago, though honestly that’s not the best way to put it.

My point is merely we should expect that a big chunk of players — perhaps half! — had stats that were influenced in some way by factors that were probably not relevant. And that’s largely a sample-size issue, right? Anthony’s coinflip data would look less extreme if teams played 162 games like baseball. We’re essentially just saying that there’s a lot we can’t possibly know, and it gets more extreme when we talk about the player level because we have to consider different systems, coaches, schemes, and usage. The actual observed data gives us our best clue about the player, but we can look at that coinflip data and recognize the unexplained variance in a short football season.
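The baseball comparison can be checked under the same idealized fair-coin assumption. This sketch (with a hypothetical helper, standard library only) compares how often a team lands at 70/30 or more lopsided over 17 flips versus 162:

```python
from math import comb, sqrt


def prob_70_30_or_worse(games: int) -> float:
    """P(a fair coin lands at 70/30 or more lopsided, in either direction, over `games` flips)."""
    lopsided = sum(
        comb(games, k)
        for k in range(games + 1)
        if k <= 0.30 * games or k >= 0.70 * games
    )
    return lopsided / 2**games


for games in (17, 162):
    sd = sqrt(0.25 / games)  # standard deviation of a fair coin's win fraction
    print(f"{games} games: SD of win% = {sd:.3f}, "
          f"P(70/30 or worse) = {prob_70_30_or_worse(games):.2e}")
```

With 17 flips, about 14% of teams should land at 70/30 or worse. With 162, the probability is vanishingly small (well under one in a hundred thousand), and the typical spread of win percentage shrinks from roughly 12 points to roughly 4.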

Two things before I throw another divider and slightly shift gears. First, when I say there’s a big chunk of players that had stats that were influenced by factors that were probably not relevant, one important question is: Relevant to what? Specifically to their ability to put up the kinds of monster fantasy seasons we want in the future. Because that’s ultimately what we’re always searching for, right? I’ve written time and again about the idea of chasing upside.

We should expect that probably half the players had stats that were influenced in some way by factors that were probably not relevant to whether they are capable of putting up the kinds of monster fantasy seasons we want in the future. But so, so much of the analysis you will see will parse the slight differences in player profiles as if marginal rate changes give us an ability to ordinally rank players, rather than group breakout potential more abstractly. I’ll get into this more in a second.

The second obvious ramification here is to consider cost. And when you do that, you recognize why I’m making such a big deal of this. Player cost is a market-based element that is highly influenced by the actual observed data. Of course it is. But if we inherently understand that there are players whose data isn’t going to reliably reflect what a larger sample would reveal about their actual statistical ability, then we can also make very specific bets within the market. I’ll get more into this as well.

Some specific examples of the last two points, both from last offseason. You read me write about both of these like half a dozen times, trying to articulate risks in relation to rookie-season data.

This time last year, it was cliché among sharp analysts to point out that, actually, Chris Olave had a better rookie season than Garrett Wilson (something I covered at length in the Jets’ section of last year’s TPRR piece). This is a minor point because Wilson still got drafted higher in fantasy, but many argued Olave should be ranked higher, both in redraft and dynasty, and for reasons that didn’t allow for the reality that 65/35 outcomes happen.

Now, Olave actually scored more points than Wilson again, but he didn’t take that huge step forward that his rookie-year data suggested might be possible, and what seems to be the consensus now is that if Aaron Rodgers had stayed healthy, Wilson probably would have been the one of these two with a real ceiling.

What I think I’d argue, to get back to the coinflip stuff, is that Olave’s rookie year was more on the 65% side of a 65/35, whereas Wilson’s was more on the 35% side (or, honestly, more like the 20% side). Interestingly enough, I think Olave’s Year 2 probably shifted back to the 35% side, and Wilson’s was probably a second straight 20%. Again, that should not be seen as excuse-making but as legitimate analysis as I try to project Year 3 potential. I truly believe Wilson has been a 4-13 coinflip outcome in each of his first two years, and there’s obviously some correlation there as it relates to playing a lot of those snaps in both years with Zach Wilson.

So the point is, there’s so much variance, and there are error bars in what the observed data actually tells us, and so much of what I do around here is just opining on what I think those error bars are. It’s why I take a foundational stat like TPRR and then write about every team, and every player, and provide the context I think is relevant to those situations. It’s a lot to parse, I get it.

But as I was highlighting earlier about those who will essentially p-hack to show off the r-squared of their newest model, the perception of complexity of those analyses is a bug, not a feature, and is really just a way to automate stuff that shouldn’t be automated. And then those same analysts will frame proper use of a stat like TPRR as less than, by attacking the predictiveness of the stat. I get ornery about it because it’s frankly really fucking annoying. There’s not enough time in the day to get in the Twitter arguments that would be necessary to walk through how stupid these attacks on specific stats are, but then I will have people asking me about my work like it’s stuck in the dark ages because there are these “better” stats than TPRR floating around.

Again, this industry sucks. It’s not really merit-based, and there is absolutely an echo chamber that reinforces some really bad practices. I’m not trying to go all Jon Snow at the Battle of the Bastards on everyone I know and work with across the entire fantasy space, but I feel this stuff pretty strongly. And I see good analysts get worn down and learn the wrong lessons, and shift the wrong direction with stuff, because the feedback they get makes it clear the incentives are all out of whack.

Like, even with perfect analysis, there’s so much we don’t know about what these teams are going to do. It’s all shrouded in secrecy, and that’s what makes trying to analyze it all so fun. So maybe your best work will put you at a 65% probability of being right on a call, but then remember what we talked about with the variance? I’ve done this for eight years. I should expect to have had seasons where I was on the 35% side of the 65/35 splits, and if my edge is only like a 65% probability of being right even when I don’t succumb to biases and issues with my process, getting hit with bad variance is going to make me look like an idiot whose predictions suck. I mean, that’s just the facts of it.
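To put rough numbers on the 65% point, here is a minimal sketch. It assumes a hypothetical 20 independent calls per season, each right with probability 0.65, and independence across seasons; all of these are simplifications for illustration:

```python
from math import comb


def prob_at_most(k: int, n: int, p: float) -> float:
    """Binomial P(X <= k): chance of k or fewer hits in n independent calls."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


CALLS_PER_SEASON = 20  # hypothetical number of meaningful calls in a season
HIT_RATE = 0.65  # the "65% probability of being right" edge from the text
SEASONS = 8

# Chance a single season comes in at .500 or worse despite a real 65% edge.
p_bad_season = prob_at_most(CALLS_PER_SEASON // 2, CALLS_PER_SEASON, HIT_RATE)

# Chance of at least one such season across the whole run, assuming seasons are independent.
p_any_bad = 1 - (1 - p_bad_season) ** SEASONS

print(f"P(a .500-or-worse season): {p_bad_season:.3f}")
print(f"P(at least one in {SEASONS} seasons): {p_any_bad:.3f}")
```

With these made-up inputs, a genuinely 65% analyst has roughly a 12% chance of a .500-or-worse season, and about a 65% chance of at least one such season over eight years.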

I’m using myself as a generic example here, but that’s true of every analyst. One of the reasons it’s easy for me to write about this clearly is that I did have a great 2023 season, and was probably on at least the 80% side of 80/20 variance when you consider the two top-30 finishes in the FFPC Main Event and the top-10 finish in the NFC Primetime, all with different co-managers (Silva in one, Siegele in another, Pat and Pete in the third). Since I last bragged about this, I heard from another Signals Gold subscriber who also did one of the one-on-one draft consultations I do in August, which puts him in a group of maybe only a handful of subs I worked most closely with. I’d already heard from another consultation + Gold subscriber who sent me a tip (“I always tip the dealer”) after winning his league, despite my protests. Anyway, here’s what David mentioned after the last time I wrote about the Stealing Signals successes:

You can include me among the "most loyal subscribers who also crushed". I just realized I never let you know:

I somehow won all 4 of my leagues this year, all with wildly different formats including a work Yahoo league with 48 teams split across 4 divisions and a keeper league in its 13th season that I hadn't made the playoffs in for the last 3 years. Thanks again for all your help, can't wait for next season.

I can’t even describe how fulfilling it is to hear this kind of stuff, especially from the people I get to work most closely with, and provide the most advice to. As I told David, he made the actual decisions and managed the teams himself. It’s also again true that my advice ran like 80% hot this year (which was needed after it undeniably ran cold in 2022).

Mostly I share this David anecdote because this is the stuff that offsets my complaints above, where I get a little too aggravated at the silliness of other analysts (and to be clear it’s not the ones no one is paying attention to, but the ones that are like 80% of the way there to good analysis, and can pose as sharp and build big followings despite never really moving the needle and more or less just throwing darts). But you guys make this stuff worth it, where realizing there are kind of a lot of people riding with me on these topics is super fulfilling.

Anyway, I had a second example of the points from the prior section, something I’d tried to articulate last year. Christian Watson looked incredible in most advanced stats after his rookie year, very much including TPRR, and I spent all offseason trying to say, “He’s probably not that good,” 1,500 different ways.

Really what I was trying to say was the coinflip point — that we should expect there to be players whose stats deviate from what they actually are, to a 65/35 or even greater degree. And then, crucially, that the market is not going to agree.

There was just no way for Watson to be cheap given his 24.1% rookie-year TPRR, but I argued every way I could that the baseline for his Year 2 TPRR should probably be quite a bit lower, and that there wasn’t much room for him to progress from what very much felt like an inflated figure. That was for a lot of reasons, including some very specific, weird stuff with Aaron Rodgers and Rookie of the Year commentary, as well as the data I shared from Barnwell on his rate of targets and production after games were decided. Which is to say, no, I can’t give you some formula to identify the next Watson, because it was the specifics of how his numbers were derived that led to that analysis. Also, yes, I recognize he got hurt, but he got a good runout with Jordan Love and the offense looking very solid, and when healthy he still struggled to separate from his young teammates, at least in the way a Year 2 WR who posted a 24.1% TPRR as a rookie should have been able to.

The point of the examples is to really drive home that there is edge to be had in trying to identify the players we think experienced some form of positive or negative variance in their observed numbers. Does it mean we’re always going to be right? No. But the “best stats” with their high r-squared numbers aren’t right every time, either. I’d argue they are right less often, and because they follow the crowd and its ADPs more closely, being right with them isn’t all that helpful anyway.

We of course still have to be respectful of what the numbers actually are. I’m never saying I’m going to take liberties to completely ignore stats, and when I go into these topics I always note that I sound a lot like some of the very worst analysts — the types who so obviously and willingly misconstrue data to fit predetermined narratives. Me saying “the data isn’t always perfect” isn’t that. It’s trying to look at the data in an intellectually honest way, understanding variance exists.

If I had a nickel for every time I had a disagreement with another fantasy analyst who believed the numbers were the be-all and end-all, and wanted examples of how players with those numbers did something specific (“no WR who has done that has ever done this,” etc.), I’d be a rich man. The counter to that kind of stuff is that when legit variance has impacted the numbers, we’re shoving a player into a cohort he doesn’t belong in, either positively or negatively, and that cohort doesn’t actually reflect his chances of success in the future. It doesn’t mean that player is seven cohorts away (in fact, in almost all cases, it probably just means one cohort closer to the average, or that we need to regress a bit more in that specific case, which makes the point so hard to argue anyway, because you’re like, “Yeah, I get it, the data does reflect some concern, but you’re embellishing it a bit and it’s not quite that bad”).
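One standard way to “regress a bit more” is a shrinkage estimate that blends a player’s observed rate with a prior mean, weighting the prior as if it were some number of extra routes. This is a generic technique swapped in for illustration, not the method this post uses, and the league-average TPRR and prior weight below are hypothetical placeholders:

```python
def shrunk_rate(events: int, trials: int, prior_mean: float, prior_trials: float) -> float:
    """Blend an observed rate with a prior mean.

    The prior counts like `prior_trials` extra trials at `prior_mean`, so
    small samples get pulled harder toward the prior than large ones.
    """
    return (events + prior_mean * prior_trials) / (trials + prior_trials)


PRIOR_MEAN = 0.18  # hypothetical average TPRR for the comparison group
PRIOR_ROUTES = 300.0  # hypothetical strength of the prior, in routes

# Hypothetical players with the same raw TPRR (24%) but very different sample sizes.
for targets, routes in [(60, 250), (144, 600)]:
    raw = targets / routes
    est = shrunk_rate(targets, routes, PRIOR_MEAN, PRIOR_ROUTES)
    print(f"{routes} routes: raw {raw:.1%} -> regressed {est:.1%}")
```

Both hypothetical players post a raw 24% TPRR, but the 250-route player gets pulled to about 20.7% and the 600-route player only to 22.0%. Regressing more “in that specific case” would amount to raising `PRIOR_ROUTES` for that player when the context suggests his sample was noisier than it looks.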

I recognize this might feel like cherry picking, and yet I’m writing this many words because I think it’s one of the most important things I can be writing about in February. I’d argue this stuff is the opposite of cherry picking. We know there are error bars. This is fact. And something that is true at the highest levels of data analysis is that data often needs to be cleaned.

Now, that doesn’t mean we can insert bias and just do whatever we want with data. But there’s a reason data sometimes needs to be cleaned! It’s a valid practice, when done right, with domain knowledge and care and a recognition of bias. One of the most impactful things I ever heard about data and modeling was that 80% of it is cleaning the data and 20% is “the sexy part” of picking an algorithm and applying it. And as I recall, when we practiced that in a business setting, one of the things we’d do is replace missing data. The practice set was, I think, Titanic passenger data, and you’d use specific pieces of information and cohorts and inference to fill in what might be one area of missing data, so a passenger’s other information didn’t need to be thrown out. You were literally guessing at an unknown, and those made-up figures were better for the analysis than omitting all the other data relevant to that passenger and making the overall sample a little smaller in other ways. The point is, there was no good answer.
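The Titanic-style exercise often fills a missing value, such as age, from a cohort like passenger class. Here’s a minimal sketch of that idea. The records are hypothetical (not the actual Titanic dataset), and cohort median is just one simple imputation choice among many:

```python
from statistics import median

# Hypothetical passenger records; None marks a missing age.
passengers = [
    {"name": "A", "pclass": 1, "age": 38.0},
    {"name": "B", "pclass": 1, "age": 50.0},
    {"name": "C", "pclass": 1, "age": None},
    {"name": "D", "pclass": 3, "age": 22.0},
    {"name": "E", "pclass": 3, "age": 26.0},
    {"name": "F", "pclass": 3, "age": 30.0},
    {"name": "G", "pclass": 3, "age": None},
]


def impute_by_cohort_median(rows, key, field):
    """Fill missing `field` values with the median of known values in the same `key` cohort.

    Assumes every cohort has at least one known value. Returns new dicts and
    leaves the input rows untouched.
    """
    known = {}
    for row in rows:
        if row[field] is not None:
            known.setdefault(row[key], []).append(row[field])
    medians = {cohort: median(values) for cohort, values in known.items()}
    return [
        {**row, field: medians[row[key]] if row[field] is None else row[field]}
        for row in rows
    ]


filled = impute_by_cohort_median(passengers, key="pclass", field="age")
for row in filled:
    print(row["name"], row["pclass"], row["age"])
```

Passenger C gets the first-class median (44.0) and G the third-class median (26.0). Those are guesses, but they let the rest of each record stay in the sample.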

Basically everything I’ve written about today is an argument that we as an industry need to be more willing to clean our data. I don’t care about super-high r-squared figures that come from a sample of players so large that the model is really proud of predicting that Quez Watkins’ production was sticky year over year, or that Marquez Valdes-Scantling is Marquez Valdes-Scantling every year. I want to understand the data that is relevant to fantasy football so I can make predictions about who might be the most important players next year.

And to do that, I need to accept that there is variance in the past data I’m looking at, and that I need to roll up my sleeves and look for contextual answers. That’s what these TPRR posts are. It’s what my Stealing Signals posts are, in-season. If you read my TPRR posts, you will not be left with one headline name to go target (in such a way that his ADP is ruined before you have even drafted him), but rather a more comprehensive understanding of how to approach, well, everyone. And whole positional strengths and weaknesses and pockets of player value, and all of that.

I get frustrated because it’s not as marketable to write about every single player, but it’s so obviously more valuable. Understanding the data you are working with and expecting variance in the samples we’re dealing with is something that many are too close-minded to accept, but again, fantasy football is a market-based game. You need to seek edges where people are too close-minded. Most stuff is baked into the numbers, but this kind of abstract thing isn’t. Too many people are going to push back and say the numbers are firm truth.

So this was meant to be an intro for the upcoming TPRR pieces, which I fear I have now dramatically oversold. But while my analysis will in no way ultimately live up to the ranting I just did, this does serve as a commentary on what I’m trying to do with the TPRR pieces, and basically all of my work. I’ve made it clear I don’t care about TPRR’s predictiveness in a vacuum, but by way of further introduction, if you want more on why I believe TPRR is the best foundational stat to begin these analyses with, I’ve previously written about that many times, and the intro to the 2022 version of these posts included a great summary as well as links to older stuff I wrote on the subject.

I’ll be back soon and diving directly into the teams, and I’ll split those posts out as AFC and NFC because they do get pretty long. Can’t wait to get into the players, rather than the theory. Until next time!
